After a series of sputtering starts, it finally looks like all the puzzle pieces are falling into place for the rise of smart glasses as the next great consumer technology.

All the puzzle pieces, that is, except one, and that one could stay forever lost.

Dramatic progress has been made in important technologies that make smart glasses a usable, and even attractive, proposition.

The term “smart glasses” is a little vague, so let’s be clear. We’re not talking about virtual reality headsets. We’re not talking about glasses with electronically controlled adaptive lenses or filters, either. We’re talking about wearable computers in the form of – and not much bulkier than – an ordinary pair of spectacles or shades.

To qualify, a device must:

- Have the ability to place visual information in front of at least one of the user’s eyes;

- Feature at least one camera for photos, videos or computer vision;

- Have the ability to respond to voice commands or physical gestures or both; and

- Be networked, either to a nearby smartphone or directly to Wi-Fi or cellular services, and preferably both.

It might optionally:

- Produce sound audible only by the wearer;

- Have its display capability extend to creating an augmented-reality overlay;

- Include any number of other sensors, transmitters and receivers; and

- Run any number of potentially useful applications, many of which would otherwise run on a smartwatch, smart wristband or a smartphone, or might only be possible on smart glasses.

If science fiction is invention, as Hugo Gernsback (after whom the genre’s Hugo Awards are named) maintained, then the inventor of augmented reality glasses is L Frank Baum.

More famous as the author of The Wonderful Wizard of Oz, Baum in 1901 wrote The Master Key, with the unwieldy (and outmoded) subtitle, An Electrical Fairy Tale Founded Upon the Mysteries of Electricity and the Optimism of Its Devotees. It was Written for Boys, But Others May Read It.

In it, he envisioned a pair of spectacles he called a “Character Marker”.

“While you wear them,” Baum wrote, “everyone you meet will be marked upon the forehead with a letter indicating his or her character. The good will bear the letter ‘G’, the evil the letter ‘E’. The wise will be marked with a ‘W’ and the foolish with an ‘F’. The kind will show a ‘K’ upon their foreheads and the cruel a letter ‘C’. Thus you may determine by a single look the true natures of all those you encounter.”

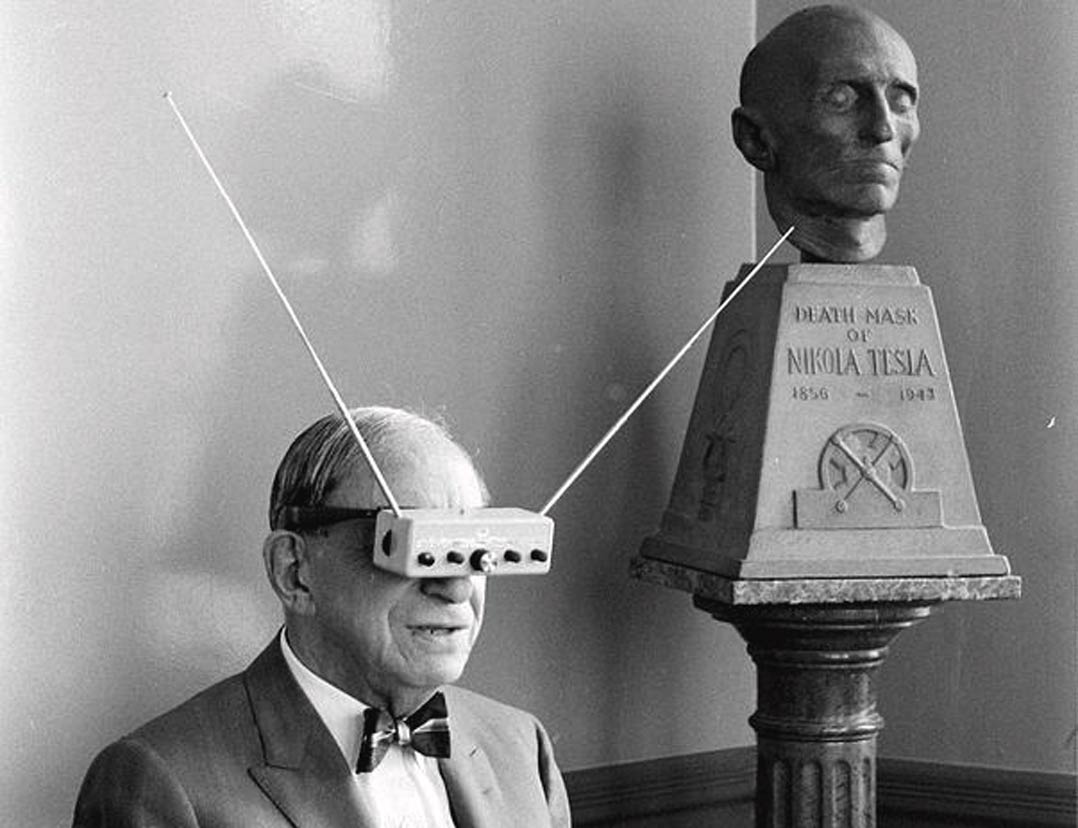

Real-world head-mounted display systems date back to at least 1960. Gernsback himself demonstrated a prototype of a stereoscopic, head-mounted display based on dual cathode-ray tubes to Life magazine in 1963.

Gernsback called them “TV glasses”. Life called them number 26 in a list of 30 Dumb Inventions.

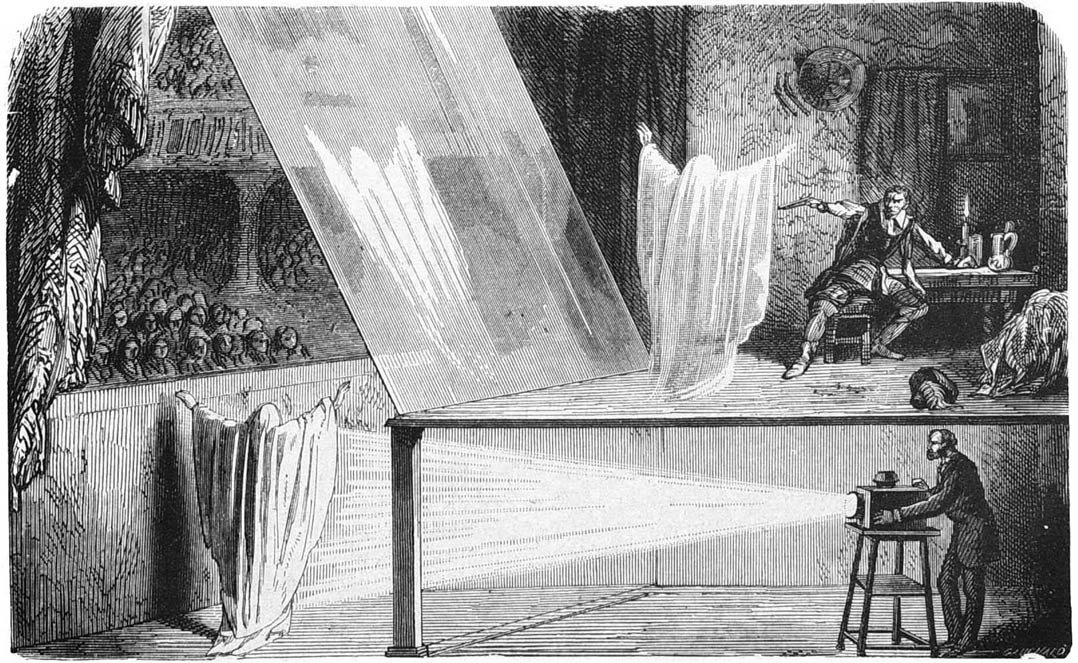

Meanwhile, the technology for head-up displays – based essentially on a windshield-sized Pepper’s Ghost mechanism – was being pioneered for military fighter jet cockpits, and occasionally appeared in automotive concept vehicles.

If anyone can take credit for being the true pioneer of smart glasses, it is a super-nerd named Steve Mann. He devoted his life to the project of actually wearing prototype wearable computers, long before the term entered the mainstream lexicon.

He built his first prototype back in 1978, when he was just 16, and has been designing, and wearing, improved versions ever since. Mann’s initial idea was not to augment reality (a term that would be coined only many years later), but to enhance it.

He was inspired as a child when his grandfather taught him to weld. Welding masks darken the view for a welder to prevent retinal damage caused by the brightness of the electrical arc. They darken the view uniformly, however, which has the drawback that the workpiece becomes harder to see, too.

Mann thought a computer, using video cameras and a screen, would be better at filtering the view in real time, reducing the dynamic range of the image so that the bright arc would not be blinding, while the darker weld and workpiece would still be clearly visible.
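To make the idea concrete, here is a minimal sketch of one common way to compress dynamic range, a simple global tone-mapping curve. It is an illustration only, not Mann's actual method; the function name, the `exposure` parameter and the sample luminance values are all assumptions made for this example.

```python
def compress_dynamic_range(luminance, exposure=1.0):
    """Squash scene luminance values (>= 0, arbitrary units) into [0, 1).

    Uses the curve L / (1 + L): mid-range values keep most of their
    contrast, while extremely bright values are pushed towards 1.
    This is an illustrative choice, not the algorithm used in EyeTap.
    """
    compressed = []
    for value in luminance:
        if value < 0:
            raise ValueError("luminance values must be non-negative")
        scaled = exposure * value           # hypothetical exposure control
        compressed.append(scaled / (1.0 + scaled))
    return compressed


# Hypothetical scene: three workpiece regions and one welding arc
# that is thousands of times brighter.
scene = [0.5, 1.0, 2.0, 10000.0]
display = compress_dynamic_range(scene)
```

In this sketch the arc, 20 000 times brighter than the darkest region in the input, ends up only about three times brighter in the output, while the workpiece regions stay in the middle of the displayable range.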

“I started exploring various ways to do this during my youth in the 1970s, when most computers were the size of large rooms and wireless data networks were unheard of,” he related in an article for the IEEE’s Spectrum magazine in 2013. “The first versions I built sported separate transmitting and receiving antennas, including rabbit ears, which I’m sure looked positively ridiculous atop my head. But building a wearable general-purpose computer with wireless digital communications capabilities was itself a feat. I was proud to have pulled that off and didn’t really care what I looked like.”

‘Mediated reality’

Mann called his experiments “mediated reality”, as opposed to “augmented reality”. His various smart glasses prototypes could overlay digital images on his sightline, but their sensors could also enhance his vision itself.

His dynamic range filter, originally used for welding, can also be used in photography, or to enhance night vision while driving. Mann claims to be able to see the face of a driver in an oncoming car even at night, when its headlights would hamper his unassisted vision.

Another design used infrared cameras, not only to improve night vision, but also to overlay subtle heat signatures onto his visual field. He developed apps to improve his ability to read text in the real world, and to superimpose map details on reality.

He called his line of wearable devices “EyeTap Digital Eye Glass”.

It wasn’t until 35 years after Mann’s original prototype, however, that tech companies advanced the state of the art sufficiently to be able to float concept models of consumer-orientated smart glasses at technology shows.

Among the early movers were Oakley, a sunglasses brand which held hundreds of patents related to projecting images onto lenses, and Canon, which made extraordinarily expensive “mixed reality” systems for professional use, costing US$125 000 plus $25 000 per year.

Vergence Labs released Epiphany Eyewear to developers in May 2011, and to consumers two years later.

Epson released the Moverio BT-100 in November 2011, billing it as the world’s first standalone binocular consumer smart glasses to offer audio-visual content via wireless connectivity. Unlike the Epiphany product, however, Epson’s glasses could not pass for ordinary spectacles of any kind.

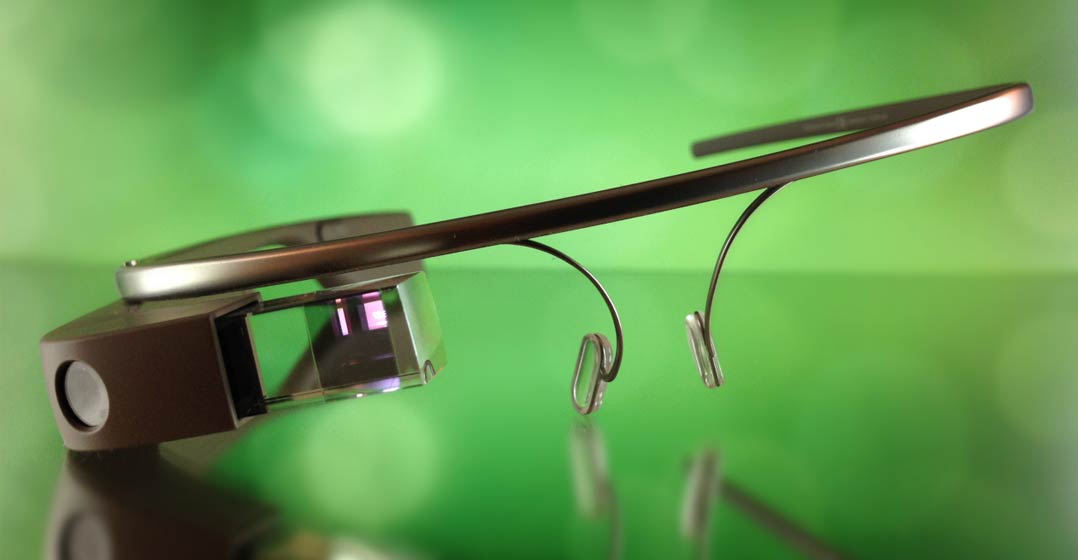

These fully realised smart glasses, in production in 2011, contrast with the prototype for Google’s smart glasses product, which was still being kept under wraps in a lab at the company’s skunkworks division. In 2011, it weighed 3.8kg.

In 2012, Google began pre-selling units for what it called Google Glass. It is unclear whether the company copied Mann’s homework, or came up with the singular “Glass” moniker independently.

It aimed the first iterations of Google Glass at what it called “Glass Explorers”, who were really just paying beta testers. These initial units were shipped only a year later, in 2013.

Google Glass was finally released to consumers in April 2014, by which time it was lighter than a pair of sunglasses, though no more stylish.

Although Google was the heavyweight that made everyone sit up and take notice of smart glasses, it was not first to market, and its product was fairly limited. It had a single front-facing camera, responded to voice commands and transmitted audio via a bone-conduction speaker against the temple. It was fully networked, of course, and could run basic apps like maps and calendars.

Instead of an augmented-reality overlay display, however, Google Glass placed a small video screen in front of and above the user’s right eye. The distinction was underlined by the fact that Google Glass didn’t require any actual lenses at all (though lenses could be fitted, and they could be prescription lenses).

This approach had the drawback that it did not account for the vergence-accommodation conflict – the mismatch between where the eye focuses and where the brain thinks an image really is, which can cause eyestrain and symptoms similar to motion sickness.

Steve Mann claimed to have solved these problems with his own approach, and expressed disappointment that Google and other manufacturers new to the smart glasses scene had taken an easier path, at the cost of potential eye strain and injury.

Another major issue with Google Glass was the safety impact of distracting images in someone’s visual field, especially while driving or in other situations where undistracted attention was necessary (or simply polite).

Users also had issues with the unintuitive interface, limited battery life and unpolished hardware package.

The most important criticism of Google Glass, and of smart glasses in general, however, was also identified first by Mann.

Creepy

While Mann was wearing his EyeTap Digital Eye Glass on a holiday to Paris in 2012, his all-American McDonald’s meal was interrupted by an assailant who tried to rip the glasses off his face before pushing him out onto the street. Mann tried to show his attacker documentation on the device, but the attacker and two accomplices ripped the papers up.

The public did not appreciate being surreptitiously and constantly filmed or recorded. They found it creepy.

Two years later, the same thing happened to a Google Glass wearer in San Francisco.

Google Glass users were soon dubbed “Glassholes”. Public venues and workplaces started banning the product, fearing surveillance, misuse and objections from other patrons or employees. Google pulled Glass from the consumer market in 2015.

Instead, it tried to focus on the business market, where use cases were better defined and the use of the glasses could be better circumscribed. In 2023, however, Google gave up on that market, too, and discontinued the project entirely.

In the intervening decade, technology has advanced tremendously.

Smart glasses have found good use cases in healthcare, manufacturing and as assistive aids for people who are visually or otherwise impaired.

Modern chips

Display technologies such as waveguides, which transmit an overlay image through a lens instead of projecting it onto the lens, and systems that can project an image directly onto the retina, have made augmented reality overlays far more sophisticated.

High-performance, low-power chips such as those from Qualcomm have made the circuitry required less bulky and extended battery life. This has allowed companies to design sleeker, far more stylish smart glasses.

Combining camera inputs and other sensors with AI-driven computer vision has opened up a world of new applications. In particular, it has proven very useful to people who are visually impaired, and who can benefit from smart glasses that describe their environment via an audio feed.

There are many other potential applications, in business and industry. The ability to record, summarise, archive and search meetings without the need for taking minutes, for example, is a potential killer application, as is the ability to recognise and describe people in business or social settings.

A number of companies have invested heavily in AI-powered smart glasses. Besides several smaller, specialist start-ups, Facebook owner Meta Platforms has a range available in conjunction with two top fashion brands, Ray-Ban and Oakley. Snap, which owns Snapchat, bought Vergence Labs and now produces a range of smart glasses simply called Spectacles. And Apple is working on a new range of smart glasses that could be launched in 2026 or 2027.

That these companies have built, or will build, excellent products that overcome many of the shortcomings of Google Glass can hardly be in doubt. All the puzzle pieces are falling into place, except one: all these smart glasses depend on cameras.

The question is whether the appeal of new killer applications for smart glasses can overcome the visceral reaction from people who do not want to be filmed or recorded everywhere they go.

Filming in public toilets, or while bending down to pick up a dropped coin, or while glancing up a staircase, or while watching children play in a park, are all part of the “creep factor” of always-on personal cameras.

The notion that everything you say can and will be recorded, to be used against you in evidence should the need arise, will make even casual social encounters feel like walking on eggshells, lest you say the wrong thing.

Risky

There is no easy way to reconcile this conflict. “Camera on” indicators can easily be bypassed or hacked. If smart glasses must be removed in all manner of public places, users will have to carry their protective charging cases with them wherever they go. If they also use prescription lenses in their smart glasses, they will have to carry an additional pair of normal glasses, so that’s two cases.

Convenience is the be-all and end-all for consumer technology. If using a device isn’t completely intuitive and frictionless, the mass market will lose interest.

There will always be commercial use cases for smart glasses. Steve Mann’s welding use case is not going away. As mass market consumer devices, however, the future for smart glasses looks grim.

Chances are that Google was right to give up on the puzzle. Meta and Apple are counting on the fact that nobody will notice the missing puzzle piece. And that’s a risky bet. — (c) 2025 NewsCentral Media

- Top image: Meta CEO Mark Zuckerberg demonstrating a pair of the company’s new smart glasses
